@Huy Duong We recently sent out a security notification about this issue.

1. Stop further attacks:

a. Use firewall or iptables settings to allow access to the Resource Manager port (default 8088) only from whitelisted IP addresses. Do this on both Resource Managers in your HA setup. This addresses only the current attack. To secure your clusters permanently, all HDP endpoints (e.g., WebHDFS) must be blocked from open access from outside the firewall.

b. Make your cluster secure (kerberized).
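For step 1a, here is a minimal sketch of how the whitelist rules could be generated. It only builds the iptables command strings and does not run them. The function name `build_iptables_rules` and the example addresses are hypothetical, and the sketch assumes IPv4 sources and the `INPUT` chain; adapt it to your own firewall layout and review the rules before applying them on each Resource Manager host.

```python
import ipaddress

RM_PORT = 8088  # default Resource Manager port mentioned in step 1a


def build_iptables_rules(allowed_sources, port=RM_PORT):
    """Return iptables commands that admit only allowed_sources on port.

    Hypothetical helper: it builds command strings and executes nothing.
    """
    rules = []
    for source in allowed_sources:
        # Validate each entry; a malformed address or CIDR raises ValueError.
        network = ipaddress.IPv4Network(source, strict=False)
        rules.append(
            f"iptables -A INPUT -p tcp --dport {port} -s {network} -j ACCEPT"
        )
    # Last rule: drop every other connection aimed at the port.
    rules.append(f"iptables -A INPUT -p tcp --dport {port} -j DROP")
    return rules


if __name__ == "__main__":
    # Placeholder addresses from documentation ranges; substitute your own.
    for rule in build_iptables_rules(["192.0.2.10", "198.51.100.0/24"]):
        print(rule)
```

Rule order matters here: the `ACCEPT` rules must come before the final `DROP`, otherwise the whitelisted hosts are blocked as well.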

2. Clean up existing attacks:

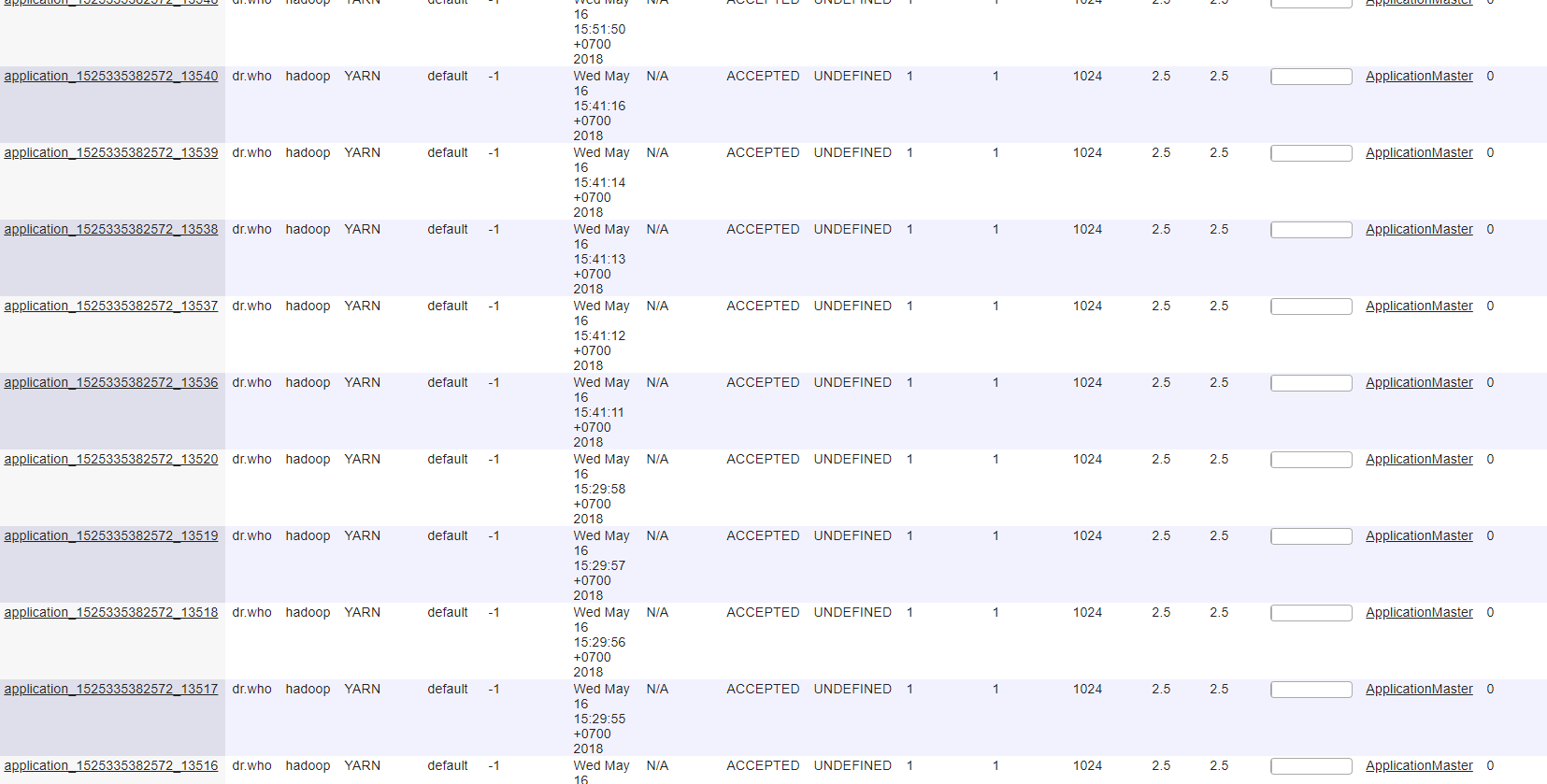

a. If you already see the above problem in your clusters, please filter all applications named “MYYARN” and kill them after verifying that these applications are not legitimately submitted by your own users.

b. You will also need to log in to the cluster machines manually, check for any process involving “z_2.sh”, “/tmp/java”, or “/tmp/w.conf”, and kill it.
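The two cleanup checks above could be scripted roughly as follows. This is a sketch, not an official tool: the helper names are hypothetical, it assumes the Resource Manager REST endpoint `/ws/v1/cluster/apps` is reachable from where you run it, and it only reports candidates. Matching is by exact application name and by plain substring on the process command line, so every hit still needs manual verification before anything is killed.

```python
import json
import urllib.request

SUSPICIOUS_APP_NAME = "MYYARN"
SUSPICIOUS_MARKERS = ("z_2.sh", "/tmp/java", "/tmp/w.conf")


def fetch_running_apps(rm_url):
    """Fetch RUNNING applications from the Resource Manager REST API.

    rm_url is an assumption, e.g. "http://<rm-host>:8088".
    """
    url = f"{rm_url}/ws/v1/cluster/apps?states=RUNNING"
    with urllib.request.urlopen(url, timeout=10) as response:
        return json.load(response)


def find_suspicious_apps(apps_json, name=SUSPICIOUS_APP_NAME):
    """Return (application id, user) pairs whose name equals `name`."""
    # "apps" may be null when no application matches, hence the `or {}`.
    apps = (apps_json.get("apps") or {}).get("app", [])
    return [(a["id"], a.get("user")) for a in apps if a.get("name") == name]


def find_suspicious_processes(ps_lines, markers=SUSPICIOUS_MARKERS):
    """Return process-listing lines that mention any of the markers."""
    return [line for line in ps_lines if any(m in line for m in markers)]
```

A possible way to use it: pass the result of `fetch_running_apps(...)` to `find_suspicious_apps`, confirm with the listed users that the applications are not theirs, and then kill each one with `yarn application -kill <application id>`. On each cluster machine, feed the lines of `ps -eo pid,args` to `find_suspicious_processes` and kill the reported PIDs after checking them.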

Hortonworks strongly recommends that affected customers involve their internal security team to determine the extent of the damage and any lateral movement inside the network. Affected customers will need to do a clean, secure installation after taking a backup and ensure that the data is not contaminated.